The Big Data Department Makes Progress in Research on Pre-trained Models for Academic Services

QIAO Ziyue, a PhD student in the Department of Big Data, supported by Prof. ZHOU Yuanchun, has proposed Researcher data Pre-Training (RPT), a transferable pre-training model for heterogeneous researcher data. Built on multi-task self-supervised learning, RPT can be transferred to multiple researcher data mining and analysis tasks to improve the quality and intelligence of academic services. The research paper has been accepted by the international journal IEEE Transactions on Big Data.

With the development of academic search engines, mining and analyzing massive researcher data, for tasks such as collaborator recommendation and researcher retrieval, has become indispensable for improving the quality and intelligence of services. However, most existing studies on researcher data mining focus on a single task for a particular application scenario and learn a task-specific model that is usually not transferable to out-of-scope tasks. Pre-training techniques provide a generalized, shared model that captures valuable information from enormous amounts of unlabeled data. Such a model can then accomplish multiple downstream tasks after a few fine-tuning steps.

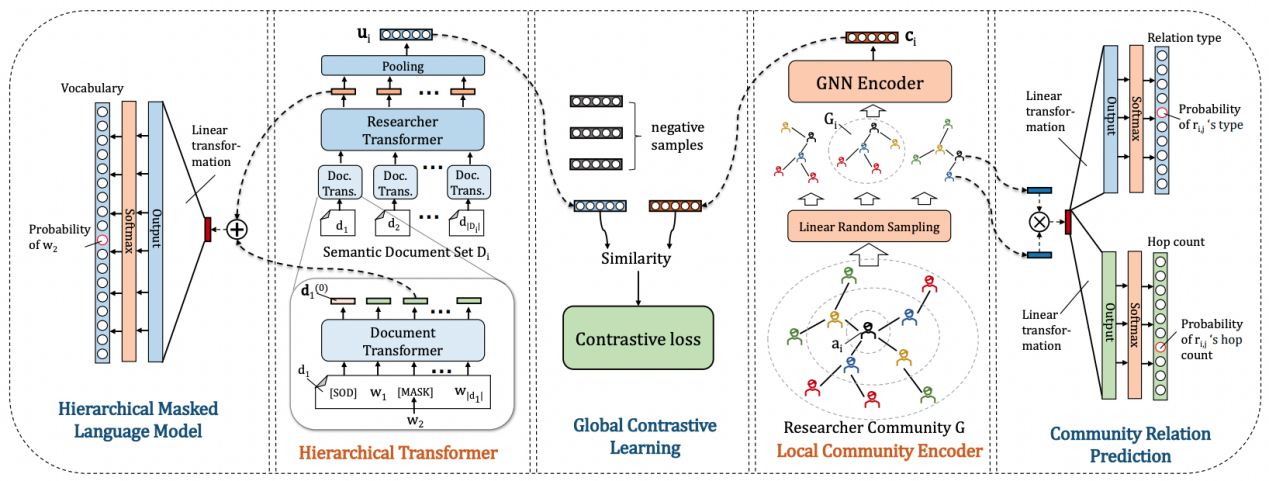

Based on this, the study proposes RPT, a researcher data pre-training model based on multi-task self-supervised learning and designed with heterogeneity, generalization, scalability, and transferability in mind, which can efficiently accomplish multiple researcher data mining tasks. Specifically, RPT divides researchers’ data into semantic document sets and a community graph, then uses a hierarchical Transformer and a local community encoder to capture information from these two categories of data, respectively. RPT has three self-supervised learning objectives: a global contrastive learning model, a hierarchical masked language model, and a community relation prediction model. RPT offers two transfer modes for fine-tuning in different scenarios. Extensive experiments were conducted to evaluate RPT, and the results on three downstream tasks verify the effectiveness of pre-training for researcher data mining.
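To make the idea of multi-task self-supervised pre-training more concrete, the following is a minimal sketch in plain Python, not the RPT implementation. It assumes an InfoNCE-style loss for the contrastive objective and a simple weighted sum to combine the three objectives; neither choice is confirmed by this article. The function names `info_nce` and `multi_task_loss`, the toy embeddings, and the two placeholder loss values are all hypothetical and serve only as illustration.

```python
import math


def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss: row i of `anchors` should match row i of `positives`.

    Assumption: this is a common contrastive loss used here for illustration;
    the article does not state which contrastive loss RPT uses.
    """
    def normalize(v):
        norm = math.sqrt(sum(x * x for x in v)) or 1.0
        return [x / norm for x in v]

    a = [normalize(v) for v in anchors]
    p = [normalize(v) for v in positives]
    total = 0.0
    for i, ai in enumerate(a):
        # Cosine similarity of anchor i to every candidate, scaled by temperature.
        logits = [sum(x * y for x, y in zip(ai, pj)) / temperature for pj in p]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability of picking the matching candidate.
        total += log_z - logits[i]
    return total / len(a)


def multi_task_loss(losses, weights=None):
    """Weighted sum of per-task losses (equal weights by default, an assumption)."""
    weights = weights or {name: 1.0 for name in losses}
    return sum(weights[name] * value for name, value in losses.items())


# Toy example: two researchers, each with two hypothetical embedding views.
view_a = [[1.0, 0.0], [0.0, 1.0]]
view_b = [[0.9, 0.1], [0.1, 0.9]]
contrastive = info_nce(view_a, view_b)

# The masked-language and relation-prediction values are made-up placeholders.
total = multi_task_loss(
    {"contrastive": contrastive, "masked_lm": 2.3, "relation": 0.7}
)
print(total)
```

In this sketch the contrastive loss is small when each researcher's two views are more similar to each other than to the views of other researchers, and the combined loss is what a single optimizer step would minimize across all three objectives.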

For details, please contact QIAO Ziyue (qiaoziyue@cnic.cn).